Introducing the Best AI Image Generator: FLUX Model's Breakthrough Enhancements

4/17/2025

For more cutting-edge AI technology news, please follow us: 【closerAI ComfyUI】. The FLUX ecosystem has entered the era of generating images in seconds! We tested nunchaku, the most powerful accelerator so far: it generates an image in about one second while cutting memory use to roughly a third. FLUX has become a real productivity tool.

Hello everyone, I'm Jimmy. In this edition, I am introducing an explosive project for the FLUX model. We all know FLUX requires a lot of memory: on my 8GB graphics card, a text-to-image or image-to-image run takes around 1.5 to 2 minutes even with some optimizations, and that is without adding LoRA, fill, redux, or other controls. Adding those controls increases the time further. Although the generated images are of better quality than those of other models, the long generation time is a significant downside. Acceleration solutions such as TeaCache and SageAttention help, but they still fall short of making FLUX a true productivity tool in terms of efficiency.

Now, a new technological direction has emerged: nunchaku, a 4-bit diffusion model inference engine. The recent version 0.2 lets FLUX generate an image in about one second, with quality nearly identical to that of the original model.

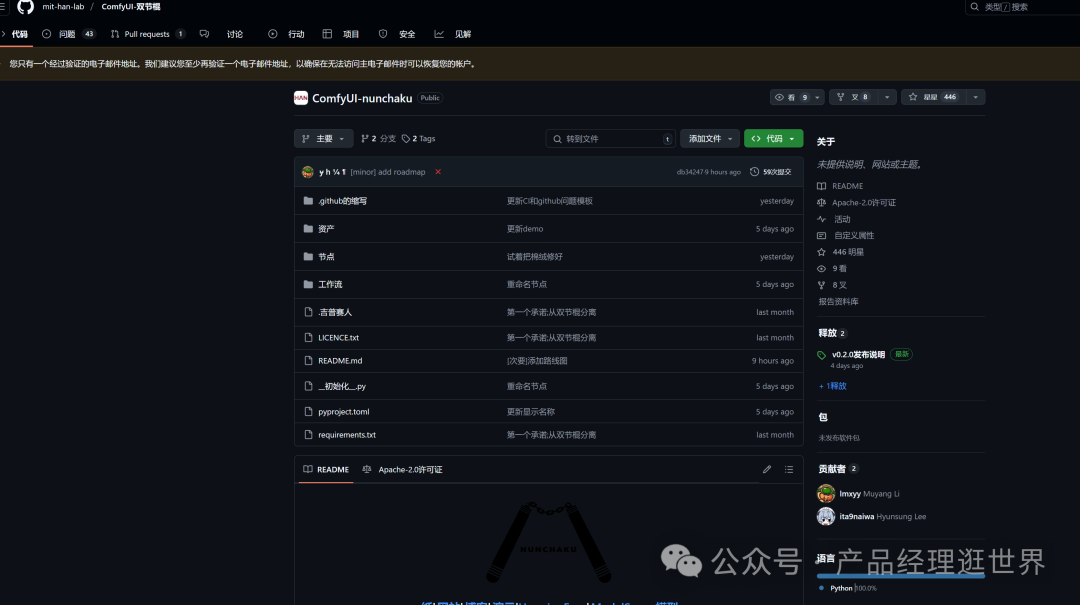

ComfyUI-nunchaku Introduction

Nunchaku is a 4-bit diffusion model inference engine developed by MIT Han Lab, specifically designed to optimize generative AI models like Stable Diffusion and Flux. Its core technological breakthroughs include:

- SVDQuant Quantization Technology: By employing Singular Value Decomposition (SVD) and kernel fusion, model weights and activation values are compressed to 4 bits, reducing memory usage by 3.6 times (e.g., a 16GB graphics card can run the Flux.1-dev model that originally required 50GB). This solves the traditional issue of image blurriness with 4-bit quantization, achieving an LPIPS quality index of only 0.326 (close to the original 0.573), which is visually indistinguishable.

- Multi-modal Ecosystem Compatibility: Full support for Flux models, LoRA, ControlNet, and multiple GPU architectures (NVIDIA Ampere/Ada/A100). Tasks such as text-to-image, ControlNet repainting, and inpainting see an 8.7 times speed increase.

- Hardware-level Optimization: Optimized for the NVIDIA CUDA architecture, supporting FP16/FP8 mixed-precision computation, achieving image generation in just 3 seconds on a 16GB graphics card (compared to the original 111 seconds).
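To make the SVD idea above more concrete, here is a minimal NumPy sketch of the general principle: keep a small low-rank branch in full precision and quantize only the residual to 4 bits. This is a toy illustration under my own assumptions, not nunchaku's actual implementation. The function names, the per-tensor symmetric quantizer, the matrix size, and the rank of 4 are all hypothetical, and the real engine also quantizes activations and fuses kernels, which this sketch ignores.

```python
import numpy as np


def quantize_int4(x: np.ndarray) -> np.ndarray:
    """Symmetric per-tensor 4-bit quantization, returned in dequantized form."""
    max_abs = np.max(np.abs(x))
    if max_abs == 0:
        return np.zeros_like(x)
    scale = max_abs / 7.0  # signed 4-bit integers span [-8, 7]
    q = np.clip(np.round(x / scale), -8, 7)
    return q * scale


def svd_lowrank_quantize(w: np.ndarray, rank: int) -> np.ndarray:
    """Keep a rank-`rank` branch in full precision, quantize only the residual."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    low_rank = (u[:, :rank] * s[:rank]) @ vt[:rank, :]
    residual = w - low_rank
    return low_rank + quantize_int4(residual)


def rms_error(a: np.ndarray, b: np.ndarray) -> float:
    """Root-mean-square difference between two arrays."""
    return float(np.sqrt(np.mean((a - b) ** 2)))


rng = np.random.default_rng(0)
# Toy weight matrix (assumption): small values plus one large-magnitude
# direction that plays the role of outliers.
w = 0.1 * rng.standard_normal((64, 64))
w += np.outer(rng.standard_normal(64), rng.standard_normal(64))

err_direct = rms_error(w, quantize_int4(w))
err_lowrank = rms_error(w, svd_lowrank_quantize(w, rank=4))
print(f"direct 4-bit RMS error:     {err_direct:.4f}")
print(f"low-rank + 4-bit RMS error: {err_lowrank:.4f}")
```

In this toy setup the large values stretch the quantization range, so quantizing the matrix directly wastes most of the 16 available levels. Once the low-rank branch absorbs them, the residual has a much narrower range and survives 4-bit rounding far better.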

ComfyUI Node: https://github.com/mit-han-lab/ComfyUI-nunchaku

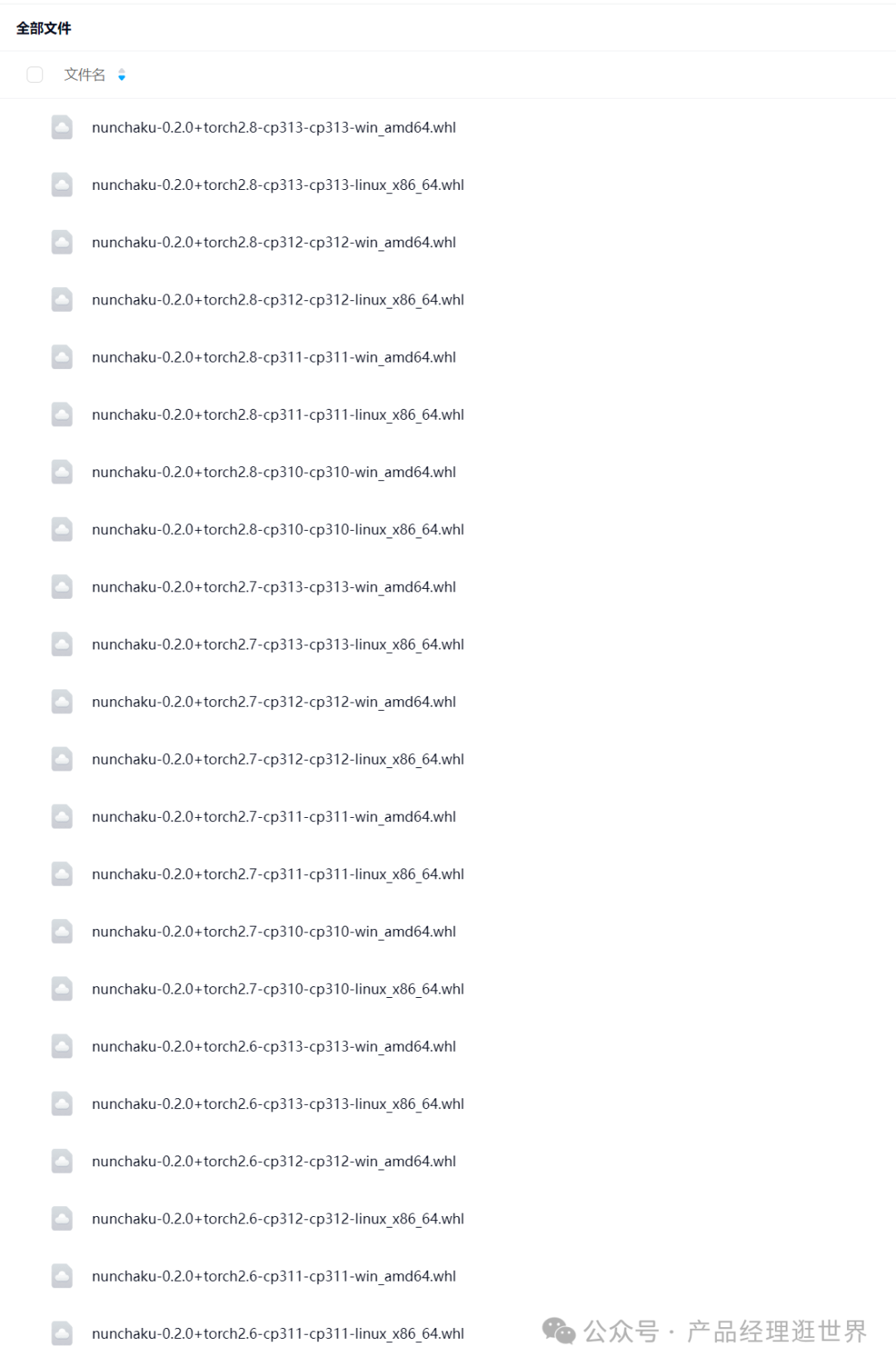

Wheel Address: https://huggingface.co/mit-han-lab/nunchaku/tree/main

Version 0.2.0 was released on April 5! This version introduces multi-LoRA and ControlNet support, and improves performance with FP16 attention and First-Block Cache. It also adds compatibility for 20-series GPUs and FLUX.1-redux.

Installation

- Download the node and place it in comfyUI/custom_nodes.

- Install the wheel from the above address using pip. Download the wheel matching your version. Make sure to have PyTorch version 2.5 or later; if your version is insufficient, please upgrade, and back up your Python packages in advance as a precaution.

- Download the model and place it in comfyUI/models/unet.
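Since the wheel requires PyTorch 2.5 or later, a quick check before installing can save a failed install. The following is a small sketch using only the Python standard library. The helper names `meets_minimum` and `installed_torch_version` are my own, and the script assumes PyTorch is installed under the package name `torch`. Run it with the same Python interpreter that ComfyUI uses.

```python
import re
from importlib import metadata


def meets_minimum(version: str, minimum: tuple = (2, 5)) -> bool:
    """Return True if a version string such as '2.5.1+cu124' is >= minimum."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum


def installed_torch_version():
    """Return the installed PyTorch version string, or None if it is missing."""
    try:
        return metadata.version("torch")
    except metadata.PackageNotFoundError:
        return None


found = installed_torch_version()
if found is None:
    print("PyTorch is not installed in this environment.")
elif meets_minimum(found):
    print(f"PyTorch {found} meets the 2.5+ requirement.")
else:
    print(f"PyTorch {found} is too old; upgrade before installing the wheel.")
```

Comparing the major and minor numbers as integers avoids the classic string-comparison trap where "2.10" would sort below "2.5".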

Finally, restart ComfyUI.

Experience and Optimization Plan

The node package ships with example workflows, but using them directly may give unstable image quality, and you might find the results comparatively inferior. Loading the model also takes some time, although it stays within an acceptable range. Once the model is loaded, generating images after re-rolling the seed or modifying parameters becomes extremely fast.

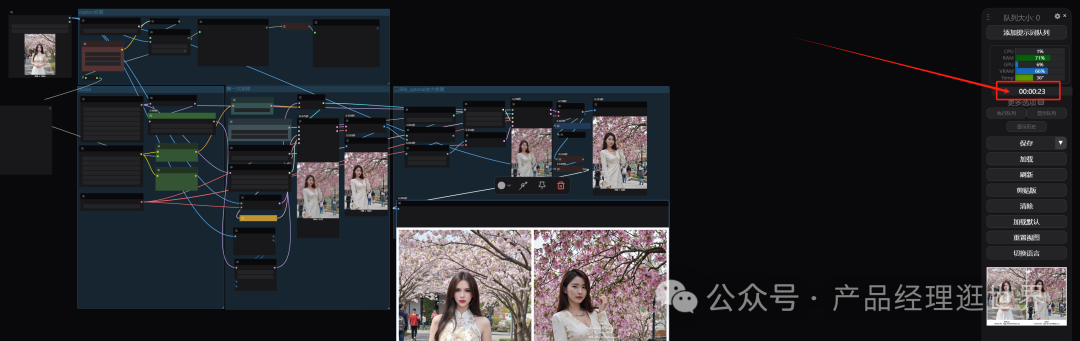

Using the optimized workflow, I generated images in 23 seconds. There is not much to say about the workflow itself: it is 4 times faster than standard FLUX, with comparable quality. Because it is an accelerated, 4-bit quantized model, there is some quality loss, but it is minimal. Here, I mainly want to share some insights and thoughts on our optimization strategy for your reference. Previously, we introduced an upscaling and repair scheme for FLUX: 【closerAI ComfyUI】Strong! New ideas for upscaling and repair! A tool that balances efficiency and quality. The essential high-definition upscaling scheme for FLUX images, a must-learn!

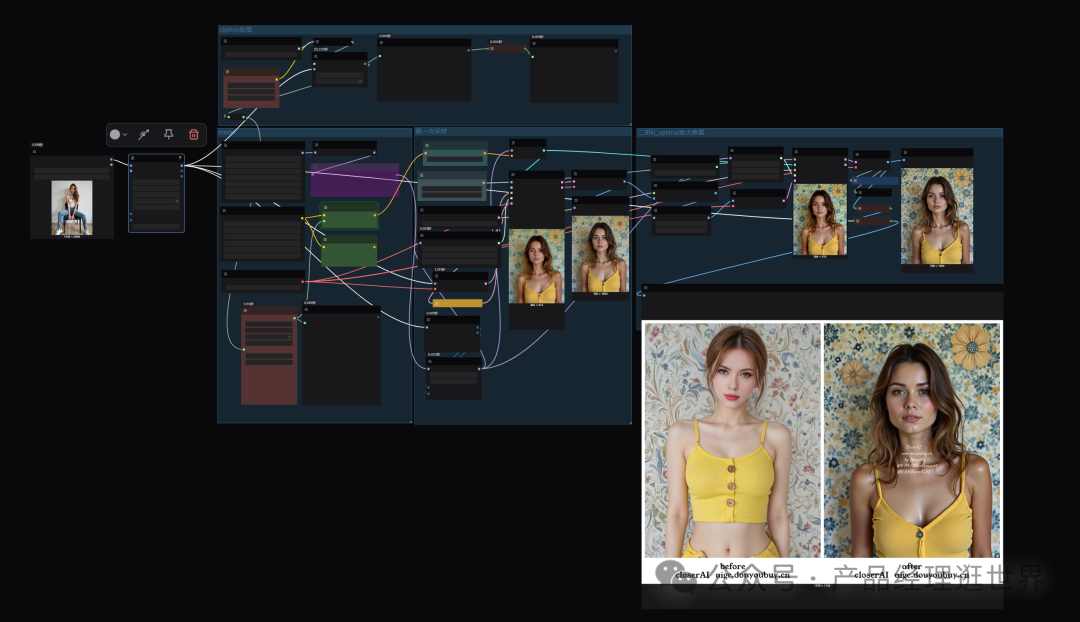

The key insight is to combine the high-definition repair approach built on the kl_optimal scheduler with nunchaku. We at closerAI built a super-accelerated image generation workflow based on this combination, as shown in the figure below:

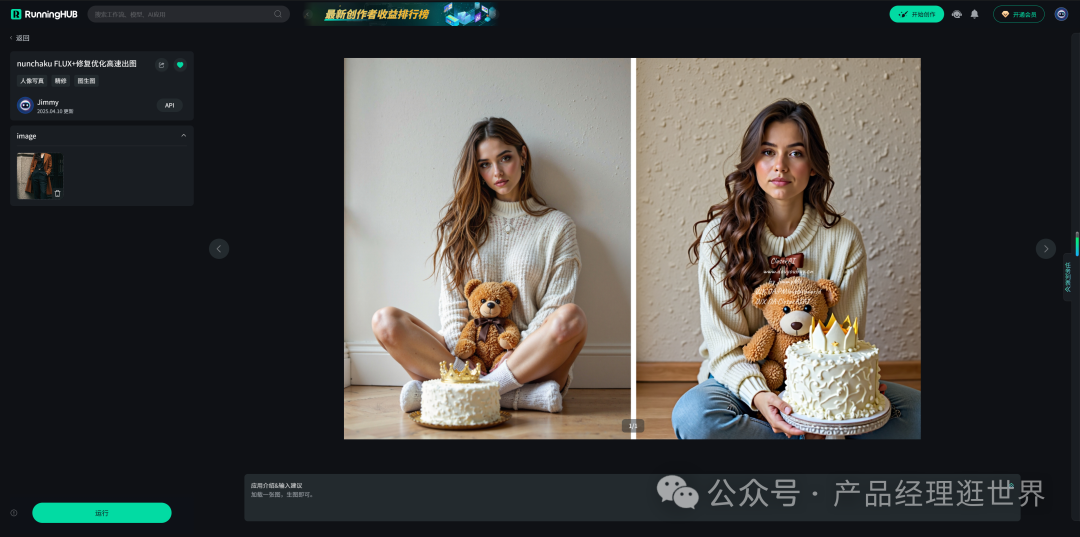

The above images were generated at the original image size with style references only, without any upscaling or LoRA. Our workflow approach is as follows:

-

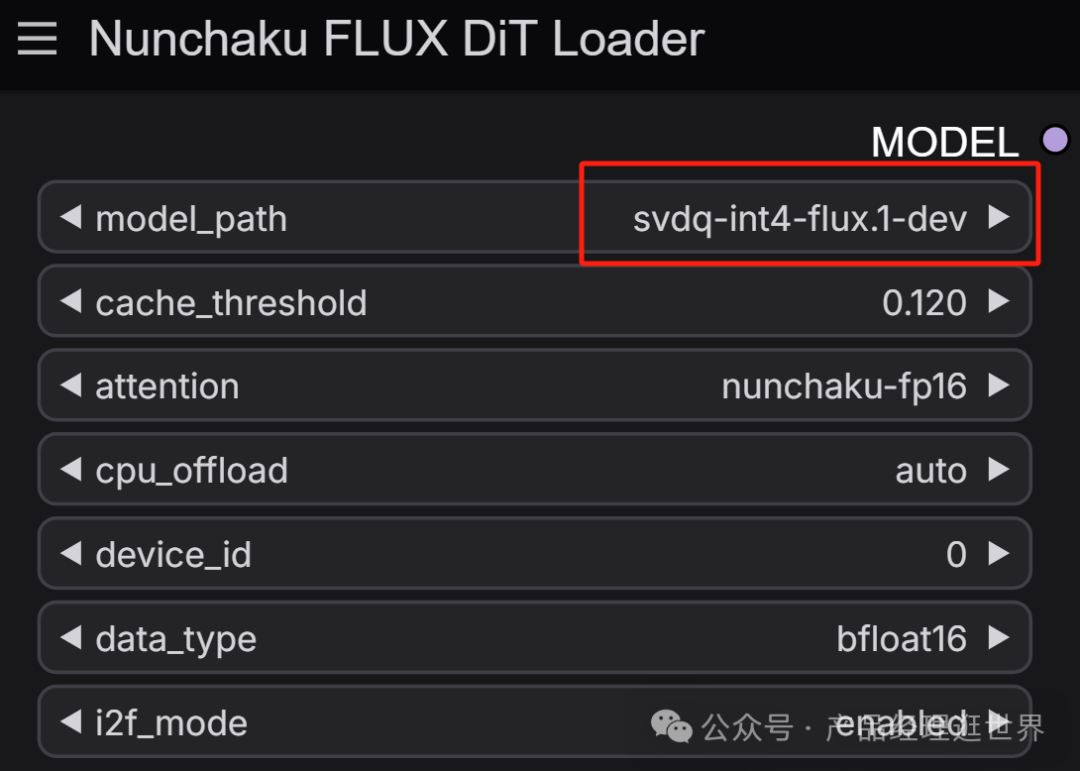

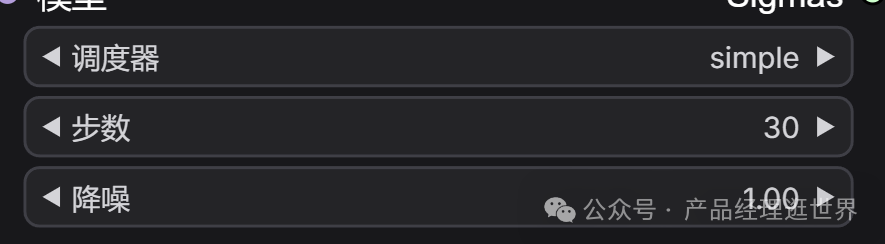

Use the nunchaku FLUX basic text-to-image workflow for the first sampling. Here, we employ the SVDQ-int4-flux.1-dev model, with other reference settings as shown in the figure below:

-

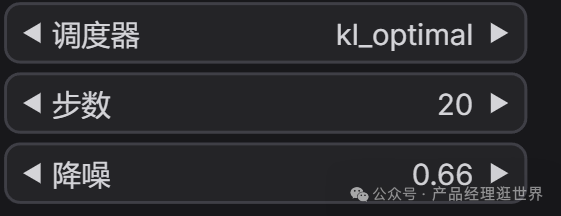

Integrate with kl_optimal for second sampling.
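For readers curious about what the kl_optimal scheduler does in the second sampling pass: as far as I understand it, the scheduler spaces the noise levels (sigmas) evenly in arctan space between a maximum and a minimum value. The sketch below is a plain-Python illustration of that idea and not ComfyUI's actual code. The function name, the step count, and the sigma bounds are all assumptions chosen for demonstration.

```python
import math


def kl_optimal_sigmas(steps: int, sigma_min: float, sigma_max: float) -> list:
    """Noise levels interpolated linearly in arctan space, from sigma_max down
    to sigma_min, with a final 0.0 appended for the last denoising step."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    lo, hi = math.atan(sigma_min), math.atan(sigma_max)
    sigmas = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at the first step, 1.0 at the last
        sigmas.append(math.tan(t * lo + (1.0 - t) * hi))
    sigmas.append(0.0)
    return sigmas


# Hypothetical values chosen only for illustration.
schedule = kl_optimal_sigmas(steps=8, sigma_min=0.03, sigma_max=1.0)
print([round(s, 4) for s in schedule])
```

In practice you simply pick kl_optimal from the scheduler dropdown of the sampler node; the sketch is only meant to show the shape of the schedule that choice produces.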

What if your local computational power is insufficient?

For friends with limited local computational power, we recommend trying it on an online ComfyUI service: runninghub.cn

Try the nunchaku FLUX workflow here: https://www.runninghub.cn/ai-detail/1910201953526448130

Registration address: https://www.runninghub.cn/?utm_source=kol01-RH151

Final Thoughts

The main advantage of nunchaku FLUX is ultra-fast image generation, which resolves FLUX's slow generation problem. Moreover, its ecosystem is gradually improving and already supports FLUX's fill, redux, ControlNet, and multi-LoRA, making it a potential substitute for standard FLUX in image generation tasks. Highly recommended. As a productivity tool, it is entirely sufficient for generating base images.

This has been an introduction to the Stable Diffusion ComfyUI FLUX nunchaku super-accelerated image generation workflow built by the closerAI team. You can try building your own workflow based on these ideas. You can also obtain the corresponding workflows on our closerAI membership site.

Thank you for reading my article! If you found it helpful, please give it a thumbs up, and if you'd like to receive updates promptly, feel free to star my work ⭐. See you next time!
